Learning High-Level Navigation Strategies via Inverse Reinforcement Learning: A Comparative Analysis

Michael Herman, Tobias Gindele, Jörg Wagner, Felix Schmitt, Christophe Quignon, Wolfram Burgard

29th Australasian Joint Conference on Artificial Intelligence 2016, Hobart, Australia, December 5 – 8, 2016

Abstract:

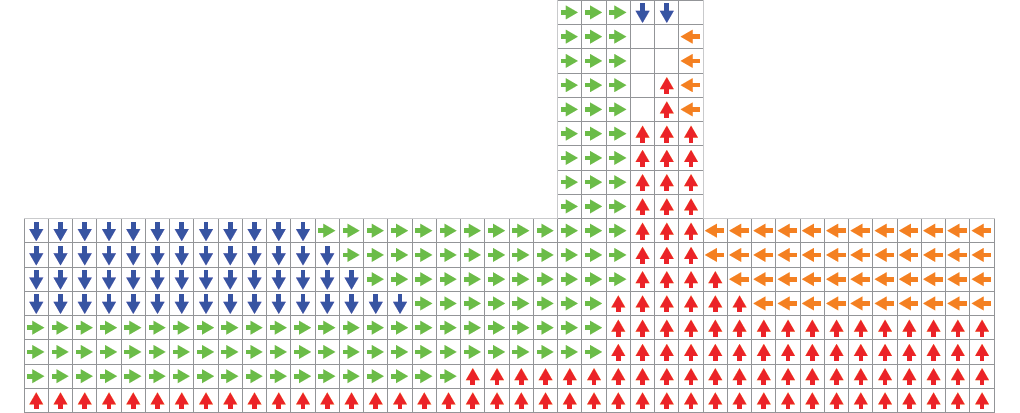

With an increasing number of robots acting in populated environments, there is an emerging necessity for programming techniques that allow for efficient adjustment of the robot’s behavior to new environments or tasks. A promising approach for teaching robots a certain behavior is Inverse Reinforcement Learning (IRL), which estimates the underlying reward function of a Markov Decision Process (MDP) from observed behavior of an expert. Recently, an approach called Simultaneous Estimation of Rewards and Dynamics (SERD) has been proposed, which extends IRL by simultaneously estimating the dynamics. The objective of this work is to compare classical IRL algorithms with SERD for learning high level navigation strategies in a realistic hallway navigation scenario solely from human expert demonstrations. We show that the theoretical advantages of SERD also pay off in practice by estimating better models of the dynamics and explaining the expert’s demonstrations more accurately.

@INPROCEEDINGS{Herman2016AI,

author={Michael Herman and Tobias Gindele and J\"org Wagner and Felix Schmitt and Christophe Quignon and Wolfram Burgard},

booktitle={Australasian Joint Conference on Artificial Intelligence},

title={Learning high-level navigation strategies via inverse reinforcement learning: A comparative analysis},

year={2016},

month={December},

}