Functionally Modular and Interpretable Temporal Filtering for Robust Segmentation

Jörg Wagner, Volker Fischer, Michael Herman, Sven Behnke

29th British Machine Vision Conference (BMVC), Newcastle upon Tyne, England, September 3–6, 2018

Abstract:

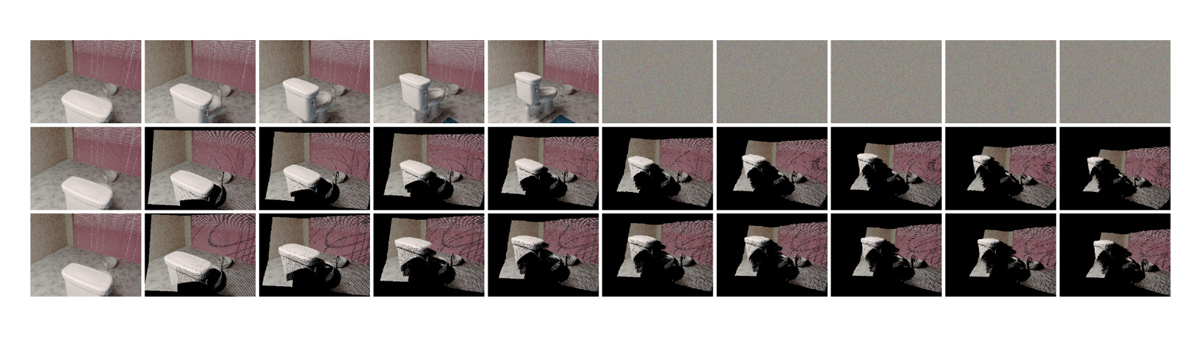

The performance of autonomous systems relies heavily on their ability to generate a robust representation of the environment. Deep neural networks have greatly improved vision-based perception systems but still fail in challenging situations, e.g. sensor outages or heavy weather. These failures are often caused by data-inherent perturbations, which significantly reduce the information provided to the perception system. We propose a functionally modularized temporal filter, which stabilizes an abstract feature representation of a single-frame segmentation model using information from previous time steps. Our filter module splits the filtering task into multiple less complex and more interpretable subtasks. The basic structure of the filter is inspired by a Bayes estimator consisting of a prediction and an update step. To make the prediction more transparent, we implement it as a geometric projection whose parameters are estimated. This additionally enables the decomposition of the filtering task into static representation filtering and low-dimensional motion filtering. Our model can cope with missing frames and is trainable end-to-end. Using photorealistic, synthetic video data, we show that the proposed architecture can overcome data-inherent perturbations. The experiments especially highlight the advantages of an interpretable and explicit filter module.
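The abstract only outlines the filter's structure. As a rough illustration of the predict/update idea, and explicitly not the authors' learned model, the NumPy sketch below filters a sequence of feature maps. All names and modelling choices are assumptions: the prediction is a hypothetical integer 2D translation implemented with `np.roll` (which wraps around image borders), and the update is a fixed scalar blend `gain` standing in for learned update weights. A missing frame (`None`) simply skips the update, so the prediction is carried forward.

```python
import numpy as np


def predict(state, shift):
    """Prediction step: warp the previous filtered feature map.

    Assumption: the geometric projection is an integer translation
    (dy, dx) along the first two axes. np.roll wraps at the borders,
    which a real projection would handle differently.
    """
    dy, dx = shift
    return np.roll(state, shift=(dy, dx), axis=(0, 1))


def update(prior, observation, gain):
    """Update step: blend the prediction with the current frame's features.

    Assumption: a fixed scalar gain in [0, 1] replaces learned weights.
    If the frame is missing (None), the prediction is returned unchanged.
    """
    if observation is None:
        return prior
    return (1.0 - gain) * prior + gain * observation


def filter_sequence(observations, shifts, gain=0.5):
    """Run predict/update over a sequence of feature maps.

    shifts[t] is the (hypothetical) motion from frame t-1 to frame t;
    shifts[0] is ignored. Outputs are None until the first frame arrives.
    """
    state = None
    outputs = []
    for obs, shift in zip(observations, shifts):
        if state is None:
            if obs is None:
                outputs.append(None)  # nothing observed yet
                continue
            state = obs.astype(float)  # initialise from the first frame
        else:
            prior = predict(state, shift)
            state = update(prior, obs, gain)
        outputs.append(state)
    return outputs
```

Separating the motion (the `shift` passed to `predict`) from the appearance update loosely mirrors the abstract's split into low-dimensional motion filtering and static representation filtering; in this sketch the motion is given rather than estimated.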

@INPROCEEDINGS{Wagner2018BMVC,
  author={Jörg Wagner and Volker Fischer and Michael Herman and Sven Behnke},
  booktitle={29th British Machine Vision Conference (BMVC)},
  title={Functionally Modular and Interpretable Temporal Filtering for Robust Segmentation},
  year={2018},
  month={September},
}